One of the most annoying things about dealing with Solaris zones deployed using OEDA is that all VMs get deployed into a single LUN. This means that even though your database hosts may be “isolated” from each other, they all depend on one top-level ZFS dataset for their root and software mounts/partitions.

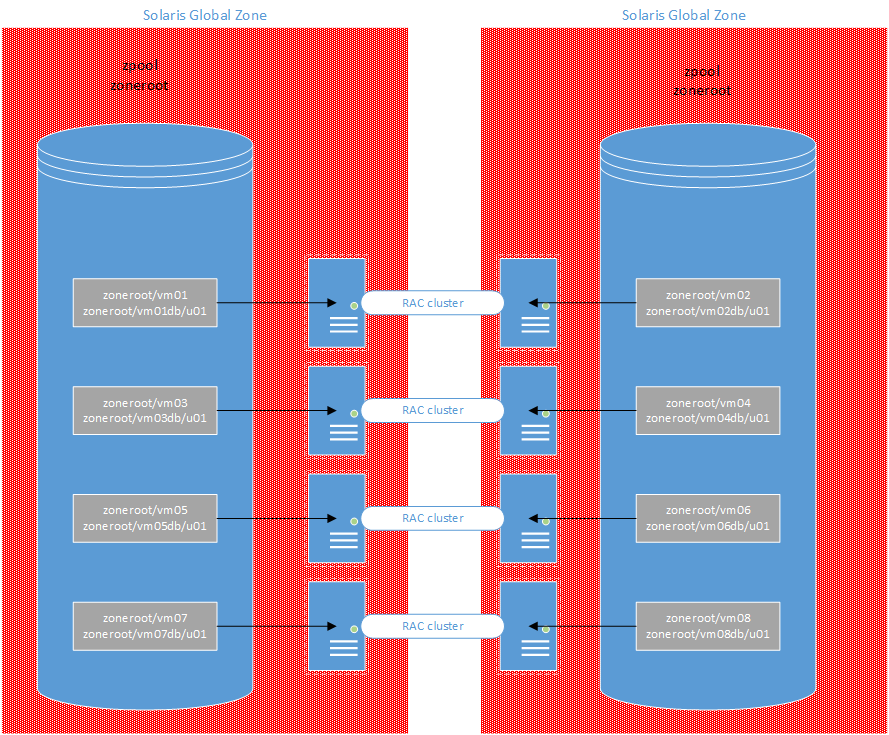

The example diagram shows two global zones, each with a zpool named zoneroot, and four exavm clusters.

This setup is less than ideal for many reasons, but mainly because data corruption at the pool level affects more than one VM, and it severely limits our flexibility.

My idea is to move each VM onto its own dedicated LUN. This does require an outage at the VM layer, but it can be done in a RAC rolling fashion.

To do this, I will create a new zpool on a new LUN or device, take a snapshot of all the datasets related to the zone, and then use zfs send to copy them into the new zpool.
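The plan above can be sketched as a small shell function. This is a minimal sketch rather than the exact procedure used in this post: `migrate_zone_datasets`, `run` and the `DRY_RUN` switch are hypothetical names, it assumes the zone is already shut down and detached, and it follows the post's convention of naming the new pool after the zone. By default it only prints the commands.

```shell
#!/bin/sh
# Sketch of the ZFS part of the move for one zone, assuming the zone is
# already shut down and detached. Nothing runs unless DRY_RUN=0; by default
# each command is only printed so the plan can be reviewed first.

DRY_RUN=${DRY_RUN:-1}

run() {
    # Print the command, then execute it only when DRY_RUN=0.
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

migrate_zone_datasets() {
    src_pool=$1          # shared pool, e.g. zoneroot
    zone=$2              # zone name, reused as the new pool name
    device=$3            # LUN presented to this global zone
    snap=${4:-ss}        # snapshot name (the post uses "ss")

    # 1. Recursive snapshot of the zone's top-level dataset and its children.
    run zfs snapshot -r "$src_pool/$zone@$snap"
    # 2. New pool on the dedicated LUN.
    run zpool create "$zone" "$device"
    # 3. Recursive send into <newpool>/<zone>. A pipeline cannot go through
    #    run(), so it is printed and executed explicitly.
    echo "+ zfs send -r $src_pool/$zone@$snap | zfs recv $zone/$zone"
    if [ "$DRY_RUN" = "0" ]; then
        zfs send -r "$src_pool/$zone@$snap" | zfs recv "$zone/$zone"
    fi
}
```

For example, `migrate_zone_datasets zoneroot ssexavm02 c0t600144F0A18742F800005B215AAD0004d0` prints the three commands used later in this post, minus `pv`, which only adds a progress display. Setting `DRY_RUN=0` makes it execute them.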

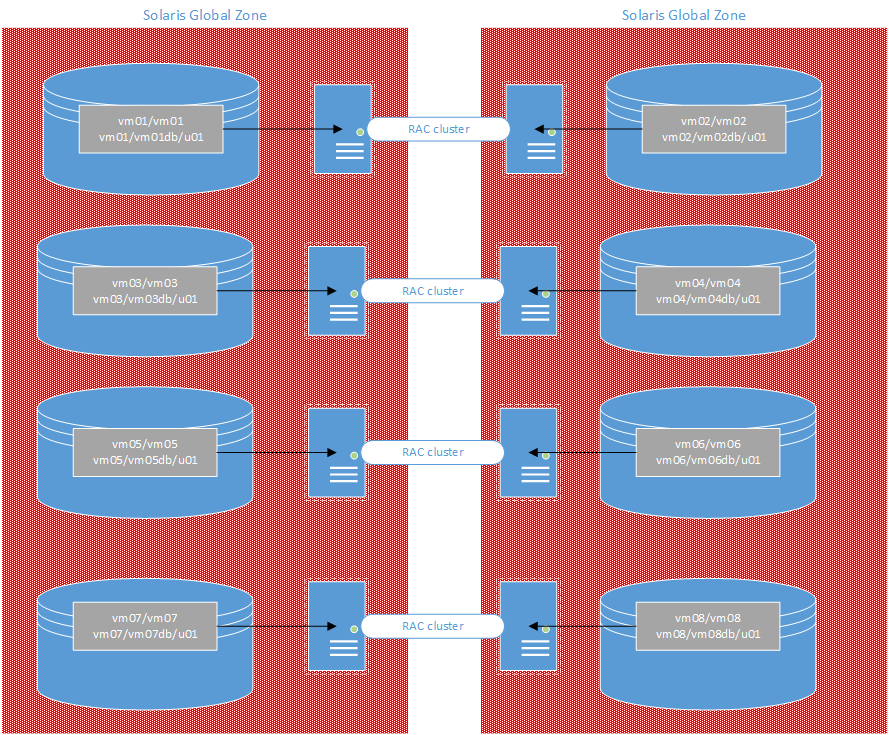

The diagram shows an example of the desired state.

In this real-world example, I’ll be separating ssexavm (Super Secret exadata VM 😀 ) and usw1devzeroadm from the zoneroot pool.

I’ve previously prepared LUNs on the ZFS storage appliance and made them visible to each global zone.

I start off by shutting down the cluster, and then shutting down and detaching the zone.

```
root@usw1zadm03:~# zlogin ssexavm02
[Connected to zone 'ssexavm02' pts/24]
Last login: Thu Jun 14 09:19:01 2018 on pts/24
Oracle Corporation SunOS 5.11 11.3 June 2017
root@ssexavm02:~# . oraenv
ORACLE_SID = [root] ? +ASM2
The Oracle base has been set to /u01/app/grid
root@ssexavm02:~# crsctl stop crs
CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'ssexavm02'
---snip---
CRS-4133: Oracle High Availability Services has been stopped.
root@ssexavm02:~# exit
logout
[Connection to zone 'ssexavm02' pts/24 closed]
root@usw1zadm03:~# zoneadm -z ssexavm02 shutdown
root@usw1zadm03:~# zoneadm -z ssexavm02 detach
```
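Before touching the datasets, it can be worth confirming that the zone really is detached, since a detached zone drops back to the `configured` state. This is a minimal sketch, assuming the colon-separated `zoneadm list -cp` format with the zone name in field 2 and the state in field 3; `zone_state` is a hypothetical helper.

```shell
#!/bin/sh
# Report a zone's state from `zoneadm list -cp` output on stdin.
# Assumption: parsable output is colon-separated, zone name in field 2,
# state in field 3. Exits non-zero if the zone is not listed at all.

zone_state() {
    # $1: zone name
    awk -F: -v z="$1" '$2 == z { print $3; found = 1 } END { exit !found }'
}

# Usage on the global zone (not run here):
#   zoneadm list -cp | zone_state ssexavm02    # expect "configured" after detach
```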

Next, get a listing of the zpools and a recursive listing of the datasets, so you can see which datasets need to be snapshotted and sent.

```
root@usw1zadm03:~# zpool list
NAME      SIZE  ALLOC  FREE  CAP  DEDUP  HEALTH  ALTROOT
rpool     556G  172G   384G  30%  1.00x  ONLINE  -
zoneroot  310G  51.4G  259G  16%  1.00x  ONLINE  -
root@usw1zadm03:~# zfs list -r zoneroot
NAME  USED  AVAIL  REFER  MOUNTPOINT
zoneroot  51.4G  254G  33K  /zoneroot
zoneroot/ssexavm02  3.75G  254G  32K  /zoneroot/ssexavm02
zoneroot/ssexavm02/ssexavm02  3.75G  41.3G  36K  /zoneHome/ssexavm02
zoneroot/ssexavm02/ssexavm02/rpool  3.75G  41.3G  31K  /rpool
zoneroot/ssexavm02/ssexavm02/rpool/ROOT  3.74G  41.3G  31K  legacy
zoneroot/ssexavm02/ssexavm02/rpool/ROOT/solaris-0  3.74G  41.3G  3.49G  /
zoneroot/ssexavm02/ssexavm02/rpool/ROOT/solaris-0/var  264M  41.3G  264M  /var
zoneroot/ssexavm02/ssexavm02/rpool/VARSHARE  1.23M  41.3G  1.17M  /var/share
zoneroot/ssexavm02/ssexavm02/rpool/VARSHARE/pkg  63K  41.3G  32K  /var/share/pkg
zoneroot/ssexavm02/ssexavm02/rpool/VARSHARE/pkg/repositories  31K  41.3G  31K  /var/share/pkg/repositories
zoneroot/ssexavm02/ssexavm02/rpool/export  63K  41.3G  32K  /export
zoneroot/ssexavm02/ssexavm02/rpool/export/home  31K  41.3G  31K  /export/home
zoneroot/ssexavm02/ssexavm02DB  62K  254G  31K  /zoneroot/ssexavm02/ssexavm02DB
zoneroot/ssexavm02/ssexavm02DB/u01  31K  120G  31K  legacy
zoneroot/usw1devzeroadm02  47.7G  254G  32K  /zoneroot/usw1devzeroadm02
zoneroot/usw1devzeroadm02/usw1devzeroadm02  6.91G  38.1G  34K  /zoneHome/usw1devzeroadm02
zoneroot/usw1devzeroadm02/usw1devzeroadm02/rpool  6.91G  38.1G  31K  /zoneHome/usw1devzeroadm02/root/rpool
zoneroot/usw1devzeroadm02/usw1devzeroadm02/rpool/ROOT  6.91G  38.1G  31K  legacy
zoneroot/usw1devzeroadm02/usw1devzeroadm02/rpool/ROOT/solaris-0  6.91G  38.1G  4.32G  /zoneHome/usw1devzeroadm02/root
zoneroot/usw1devzeroadm02/usw1devzeroadm02/rpool/ROOT/solaris-0/var  2.58G  38.1G  2.58G  /zoneHome/usw1devzeroadm02/root/var
zoneroot/usw1devzeroadm02/usw1devzeroadm02/rpool/VARSHARE  1.23M  38.1G  1.17M  /zoneHome/usw1devzeroadm02/root/var/share
zoneroot/usw1devzeroadm02/usw1devzeroadm02/rpool/VARSHARE/pkg  63K  38.1G  32K  /zoneHome/usw1devzeroadm02/root/var/share/pkg
zoneroot/usw1devzeroadm02/usw1devzeroadm02/rpool/VARSHARE/pkg/repositories  31K  38.1G  31K  /zoneHome/usw1devzeroadm02/root/var/share/pkg/repositories
zoneroot/usw1devzeroadm02/usw1devzeroadm02/rpool/export  157K  38.1G  32K  /zoneHome/usw1devzeroadm02/root/export
zoneroot/usw1devzeroadm02/usw1devzeroadm02/rpool/export/home  125K  38.1G  34K  /zoneHome/usw1devzeroadm02/root/export/home
zoneroot/usw1devzeroadm02/usw1devzeroadm02/rpool/export/home/grid  47K  38.1G  47K  /zoneHome/usw1devzeroadm02/root/export/home/grid
zoneroot/usw1devzeroadm02/usw1devzeroadm02/rpool/export/home/oracle  44K  38.1G  44K  /zoneHome/usw1devzeroadm02/root/export/home/oracle
zoneroot/usw1devzeroadm02/usw1devzeroadm02DB  40.8G  254G  31K  /zoneroot/usw1devzeroadm02/usw1devzeroadm02DB
zoneroot/usw1devzeroadm02/usw1devzeroadm02DB/u01  40.8G  59.2G  40.8G  legacy
root@usw1zadm03:~#
```

The datasets I want to move are zoneroot/ssexavm02 and everything beneath it. So I’ll take a recursive snapshot at zoneroot/ssexavm02, which snapshots every dataset all the way down to zoneroot/ssexavm02/ssexavm02DB/u01.
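To see exactly which datasets a recursive snapshot at zoneroot/ssexavm02 will cover, the recursive listing can be filtered by name. This is a sketch; `datasets_under` is a hypothetical helper that reads `zfs list -H -o name` output, where `-H` drops the header line.

```shell
#!/bin/sh
# List the datasets a `zfs snapshot -r PARENT@snap` would cover, given the
# names printed by `zfs list -H -o name -r POOL` on stdin.

datasets_under() {
    parent=$1
    # Keep PARENT itself and names beginning with "PARENT/". Matching on the
    # trailing slash stops a sibling such as "PARENTx" from being included.
    awk -v p="$parent" '$0 == p || index($0, p "/") == 1 { print }'
}

# Usage on the global zone (not run here):
#   zfs list -H -o name -r zoneroot | datasets_under zoneroot/ssexavm02
#   zfs list -H -o name -t snapshot -r zoneroot/ssexavm02   # after snapshotting
```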

Once I have those snapshots, I can create the new zpool and then recursively send zoneroot/ssexavm02@ss and receive it into newpoolname/ssexavm02. The receive reports a mount error, simply because the data now exists twice and both copies have the same mount points; the listings below show that the datasets were still received.

```
root@usw1zadm03:~# zfs snapshot -r zoneroot/ssexavm02@ss
root@usw1zadm03:~# zpool create ssexavm02 c0t600144F0A18742F800005B215AAD0004d0
root@usw1zadm03:~# zfs send -r zoneroot/ssexavm02@ss | pv | zfs recv ssexavm02/ssexavm02
3.86GB 0:01:55 [34.3MB/s] [ <=> ]
cannot mount 'ssexavm02/ssexavm02/ssexavm02' on '/zoneHome/ssexavm02': directory is not empty
root@usw1zadm03:~# zfs list -r ssexavm02
NAME  USED  AVAIL  REFER  MOUNTPOINT
ssexavm02  3.75G  290G  32K  /ssexavm02
ssexavm02/ssexavm02  3.75G  290G  32K  /ssexavm02/ssexavm02
ssexavm02/ssexavm02/ssexavm02  3.75G  41.3G  36K  /zoneHome/ssexavm02
ssexavm02/ssexavm02/ssexavm02/rpool  3.75G  41.3G  31K  /rpool
ssexavm02/ssexavm02/ssexavm02/rpool/ROOT  3.74G  41.3G  31K  legacy
ssexavm02/ssexavm02/ssexavm02/rpool/ROOT/solaris-0  3.74G  41.3G  3.49G  /
ssexavm02/ssexavm02/ssexavm02/rpool/ROOT/solaris-0/var  264M  41.3G  264M  /var
ssexavm02/ssexavm02/ssexavm02/rpool/VARSHARE  1.23M  41.3G  1.17M  /var/share
ssexavm02/ssexavm02/ssexavm02/rpool/VARSHARE/pkg  63K  41.3G  32K  /var/share/pkg
ssexavm02/ssexavm02/ssexavm02/rpool/VARSHARE/pkg/repositories  31K  41.3G  31K  /var/share/pkg/repositories
ssexavm02/ssexavm02/ssexavm02/rpool/export  63K  41.3G  32K  /export
ssexavm02/ssexavm02/ssexavm02/rpool/export/home  31K  41.3G  31K  /export/home
ssexavm02/ssexavm02/ssexavm02DB  62K  290G  31K  /ssexavm02/ssexavm02/ssexavm02DB
ssexavm02/ssexavm02/ssexavm02DB/u01  31K  120G  31K  legacy
root@usw1zadm03:~# zfs list -r zoneroot
NAME  USED  AVAIL  REFER  MOUNTPOINT
zoneroot  51.4G  254G  33K  /zoneroot
zoneroot/ssexavm02  3.75G  254G  32K  /zoneroot/ssexavm02
zoneroot/ssexavm02/ssexavm02  3.75G  41.3G  36K  /zoneHome/ssexavm02
zoneroot/ssexavm02/ssexavm02/rpool  3.75G  41.3G  31K  /rpool
zoneroot/ssexavm02/ssexavm02/rpool/ROOT  3.74G  41.3G  31K  legacy
zoneroot/ssexavm02/ssexavm02/rpool/ROOT/solaris-0  3.74G  41.3G  3.49G  /
zoneroot/ssexavm02/ssexavm02/rpool/ROOT/solaris-0/var  264M  41.3G  264M  /var
zoneroot/ssexavm02/ssexavm02/rpool/VARSHARE  1.23M  41.3G  1.17M  /var/share
zoneroot/ssexavm02/ssexavm02/rpool/VARSHARE/pkg  63K  41.3G  32K  /var/share/pkg
zoneroot/ssexavm02/ssexavm02/rpool/VARSHARE/pkg/repositories  31K  41.3G  31K  /var/share/pkg/repositories
zoneroot/ssexavm02/ssexavm02/rpool/export  63K  41.3G  32K  /export
zoneroot/ssexavm02/ssexavm02/rpool/export/home  31K  41.3G  31K  /export/home
zoneroot/ssexavm02/ssexavm02DB  62K  254G  31K  /zoneroot/ssexavm02/ssexavm02DB
zoneroot/ssexavm02/ssexavm02DB/u01  31K  120G  31K  legacy
zoneroot/usw1devzeroadm02  47.7G  254G  32K  /zoneroot/usw1devzeroadm02
---snip---
zoneroot/usw1devzeroadm02/usw1devzeroadm02DB/u01  40.8G  59.2G  40.8G  legacy
```
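To see which mount points the two copies share, the `name` and `mountpoint` columns of both trees can be compared. This is a sketch; `mount_conflicts` is a hypothetical helper. A duplicate here only means two datasets carry the same `mountpoint` value, so not every duplicate necessarily produces a mount error; in this case the only reported failure was `/zoneHome/ssexavm02`.

```shell
#!/bin/sh
# Report mountpoint values shared by more than one dataset, reading
# "name<TAB>mountpoint" lines as printed by `zfs list -H -o name,mountpoint`.
# legacy, none and "-" are skipped because they are not ZFS-managed paths.

mount_conflicts() {
    awk '
        BEGIN { FS = "\t" }
        $2 != "legacy" && $2 != "none" && $2 != "-" {
            count[$2]++
            names[$2] = names[$2] " " $1
        }
        END {
            for (m in count)
                if (count[m] > 1)
                    print m ":" names[m]
        }'
}

# Usage on the global zone (not run here):
#   { zfs list -H -o name,mountpoint -r zoneroot/ssexavm02
#     zfs list -H -o name,mountpoint -r ssexavm02; } | mount_conflicts
```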

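Once the data is copied, it’s time to clean up and make room for the migrated data: export the new pool so its datasets are unmounted, destroy the old datasets, and then import the new pool so its datasets can mount in their place.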

```
root@usw1zadm03:~# zpool export ssexavm02            <- NEW DATA
root@usw1zadm03:~# zfs destroy -r zoneroot/ssexavm02  <- DESTROY THE OLD DATA
root@usw1zadm03:~# zpool import ssexavm02
```
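Because `zfs destroy -r` cannot be undone, a cautious variant is to confirm, before exporting the new pool and destroying the old datasets, that every old dataset name has a counterpart under the new pool. This is a sketch; `verify_copy` is a hypothetical helper, and it compares dataset names only, not their contents.

```shell
#!/bin/sh
# Before `zfs destroy -r` on the old tree, confirm every old dataset has a
# counterpart in the new pool. Only names are compared, not data.

verify_copy() {
    old_prefix=$1   # e.g. zoneroot/ssexavm02
    new_prefix=$2   # e.g. ssexavm02/ssexavm02
    old_list=$3     # file holding `zfs list -H -o name -r $old_prefix`
    new_list=$4     # file holding `zfs list -H -o name -r $new_prefix`
    status=0
    while IFS= read -r ds; do
        # Swap the leading old prefix for the new one.
        expected=$new_prefix${ds#"$old_prefix"}
        if ! grep -qxF "$expected" "$new_list"; then
            echo "missing: $expected"
            status=1
        fi
    done < "$old_list"
    return "$status"
}

# Usage on the global zone, before `zpool export ssexavm02` (not run here):
#   zfs list -H -o name -r zoneroot/ssexavm02 > /tmp/old.txt
#   zfs list -H -o name -r ssexavm02/ssexavm02 > /tmp/new.txt
#   verify_copy zoneroot/ssexavm02 ssexavm02/ssexavm02 /tmp/old.txt /tmp/new.txt
```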

Next, I need to make a minor change to the /u01 filesystem in the zone configuration, because its dataset name has changed.

```
root@usw1zadm03:~# zonecfg -z ssexavm02
zonecfg:ssexavm02> select fs dir=/u01
zonecfg:ssexavm02:fs> info
fs 0:
        dir: /u01
        special: zoneroot/ssexavm02/ssexavm02DB/u01    <- OLD DATASET NAME
        raw not specified
        type: zfs
        options: []
zonecfg:ssexavm02:fs> set special=ssexavm02/ssexavm02/ssexavm02DB/u01    <- NEW NAME
zonecfg:ssexavm02:fs> end
zonecfg:ssexavm02> commit
zonecfg:ssexavm02> exit
root@usw1zadm03:~# zoneadm -z ssexavm02 attach
Progress being logged to /var/log/zones/zoneadm.20180613T180939Z.ssexavm02.attach
    Installing: Using existing zone boot environment
      Zone BE root dataset: ssexavm02/ssexavm02/ssexavm02/rpool/ROOT/solaris-0
---snip----
Result: Attach Succeeded.
root@usw1zadm03:~# zoneadm -z ssexavm02 boot
```
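The interactive zonecfg session can also be scripted. This is a sketch under a few assumptions: the old and new dataset prefixes are the ones used in this post, `new_special` and `u01_fs_commands` are hypothetical helpers, and the generated subcommands are fed to `zonecfg -f`, which reads subcommands from a file.

```shell
#!/bin/sh
# Work out the new "special" for the /u01 fs resource and print the zonecfg
# subcommands that apply it, so the change can be fed to `zonecfg -f`.

new_special() {
    # $1: old special, $2: old dataset prefix, $3: new dataset prefix
    case $1 in
        "$2" | "$2"/*) printf '%s%s\n' "$3" "${1#"$2"}" ;;
        *) echo "not under $2: $1" >&2; return 1 ;;
    esac
}

u01_fs_commands() {
    # $1: new dataset name for the fs resource mounted at /u01
    printf '%s\n' 'select fs dir=/u01' "set special=$1" 'end' 'commit'
}

# Usage on the global zone (not run here):
#   new=$(new_special zoneroot/ssexavm02/ssexavm02DB/u01 \
#         zoneroot/ssexavm02 ssexavm02/ssexavm02)
#   u01_fs_commands "$new" > /tmp/ssexavm02-u01.cfg
#   zonecfg -z ssexavm02 -f /tmp/ssexavm02-u01.cfg
#   zonecfg -z ssexavm02 info fs
```

Because the mapping is a plain prefix swap, the same helper also gives the name needed after renaming a whole pool, for example `new_special zoneroot/usw1devzeroadm02/usw1devzeroadm02DB/u01 zoneroot usw1devzeroadm02`.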

This completes the data move for the ssexavm02 zone. Once the cluster is back online, repeat the same steps on the first node.

The next part is somewhat different, since usw1devzeroadm02 is the last remaining zone in the zoneroot zpool. Instead of sending the data to a new pool, we can simply rename the pool by exporting it and importing it under a different name.

```
root@usw1zadm03:~# zoneadm -z usw1devzeroadm02 halt
root@usw1zadm03:~# zpool export zoneroot
root@usw1zadm03:~# zpool import zoneroot usw1devzeroadm02
root@usw1zadm03:~# zpool list
NAME              SIZE  ALLOC  FREE   CAP  DEDUP  HEALTH  ALTROOT
rpool             556G  462G   93.8G  83%  1.00x  ONLINE  -
ssexavm02         298G  30.0G  268G   10%  1.00x  ONLINE  -
usw1devzeroadm02  310G  57.5G  253G   18%  1.00x  ONLINE  -
root@usw1zadm03:~# zonecfg -z usw1devzeroadm02
zonecfg:usw1devzeroadm02> select fs dir=/u01
zonecfg:usw1devzeroadm02:fs> set special=usw1devzeroadm02/usw1devzeroadm02/usw1devzeroadm02DB/u01
zonecfg:usw1devzeroadm02:fs> end
zonecfg:usw1devzeroadm02> commit
zonecfg:usw1devzeroadm02> exit
root@usw1zadm03:~# zoneadm -z usw1devzeroadm02 boot
root@usw1zadm03:~# zlogin usw1devzeroadm02
```
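Renaming the pool is only appropriate here because usw1devzeroadm02 is the last zone left in it. A quick pre-check, assuming (as in the listings above) that each zone has exactly one first-level dataset named after it; `other_children` is a hypothetical helper that prints any first-level dataset other than the expected one, so empty output means the pool holds nothing else.

```shell
#!/bin/sh
# Print first-level datasets of POOL other than ZONE, reading the names
# from `zfs list -H -o name -r POOL` on stdin. Empty output means the pool
# contains only that zone's tree, so renaming the pool affects no one else.

other_children() {
    # $1: pool name, $2: zone expected to be the only first-level child
    awk -F/ -v pool="$1" -v zone="$2" '
        NF == 2 && $1 == pool && $2 != zone { print }'
}

# Usage on the global zone, before `zpool export zoneroot` (not run here):
#   zfs list -H -o name -r zoneroot | other_children zoneroot usw1devzeroadm02
```

The rename changes every dataset name from zoneroot/... to usw1devzeroadm02/..., which is why the /u01 `special` has to be updated after the import.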